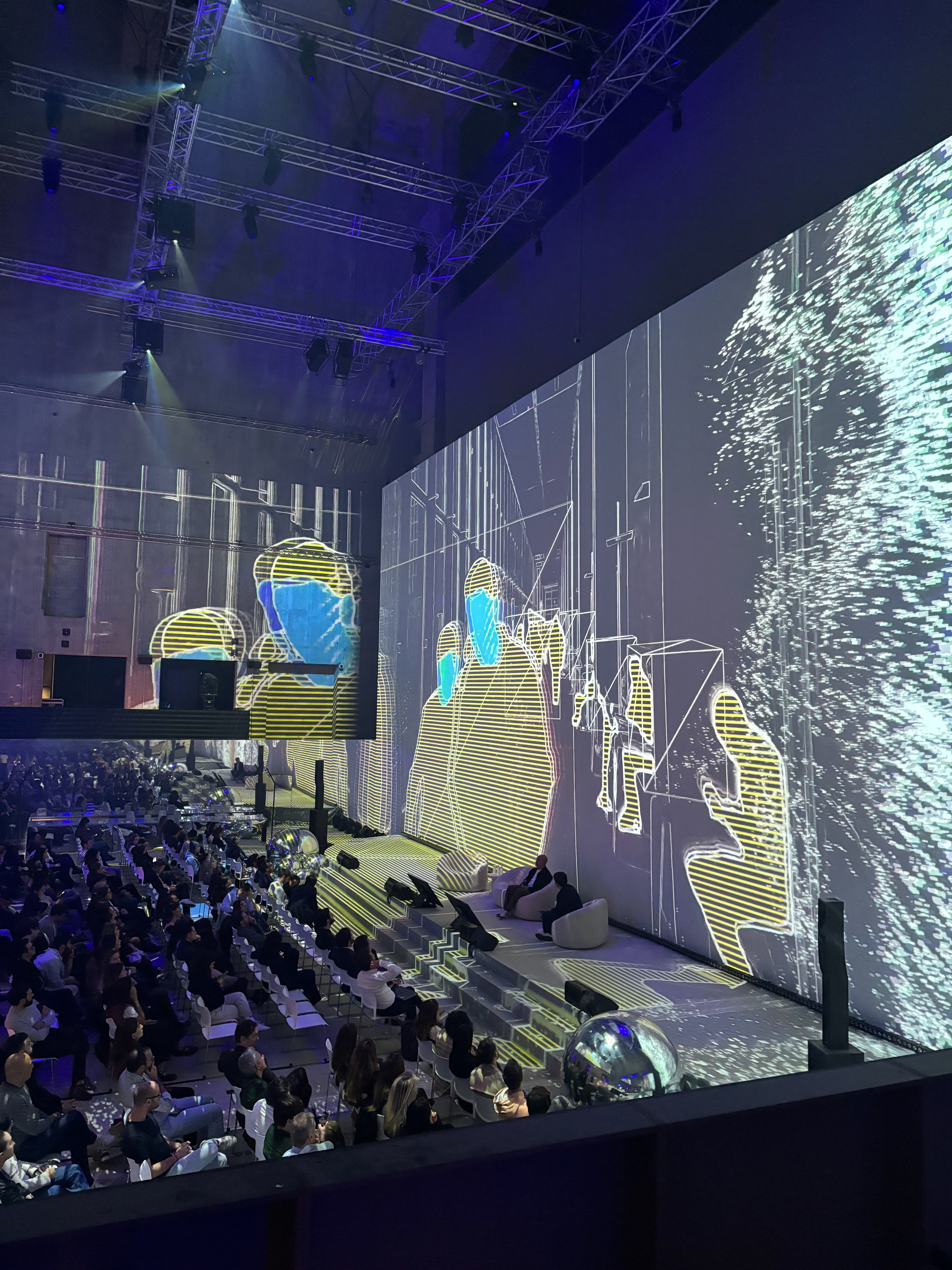

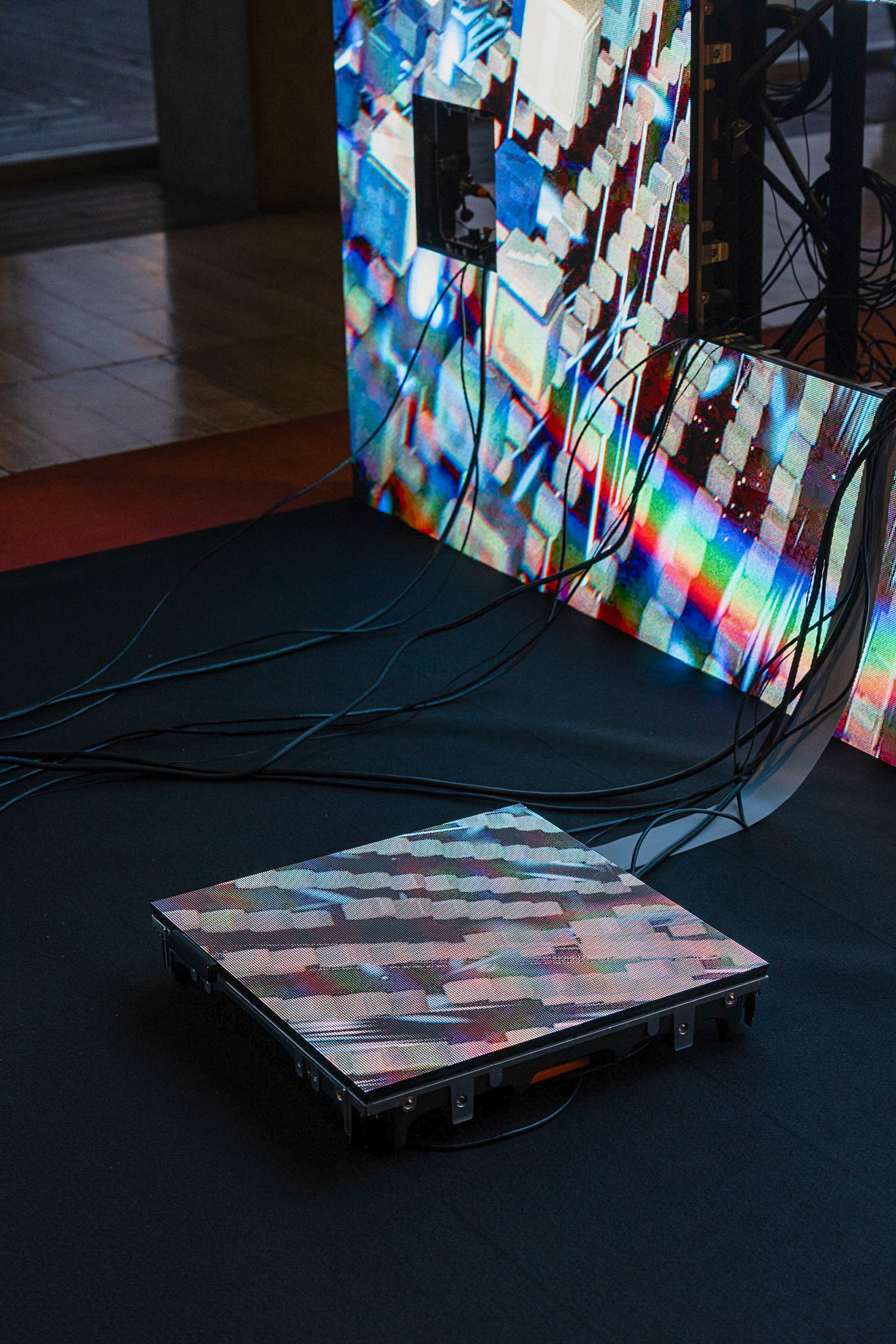

Deep Diving is an exploration of the hidden rhythm of knowledge transmission — how machines perceive, interpret, and relate to human presence. The piece was showcased at the Bibliothèque nationale de France during the AI Action Summit in Paris.

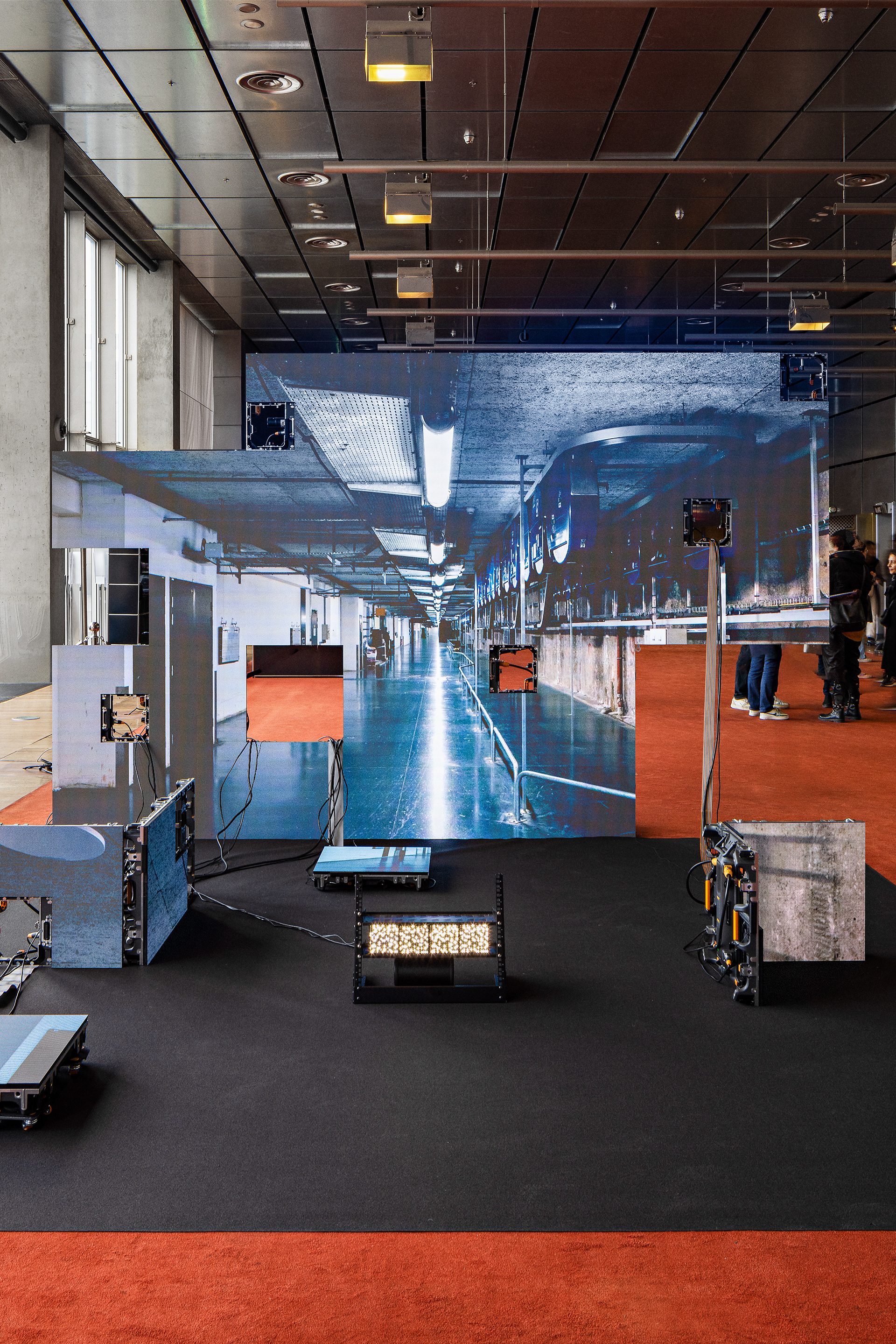

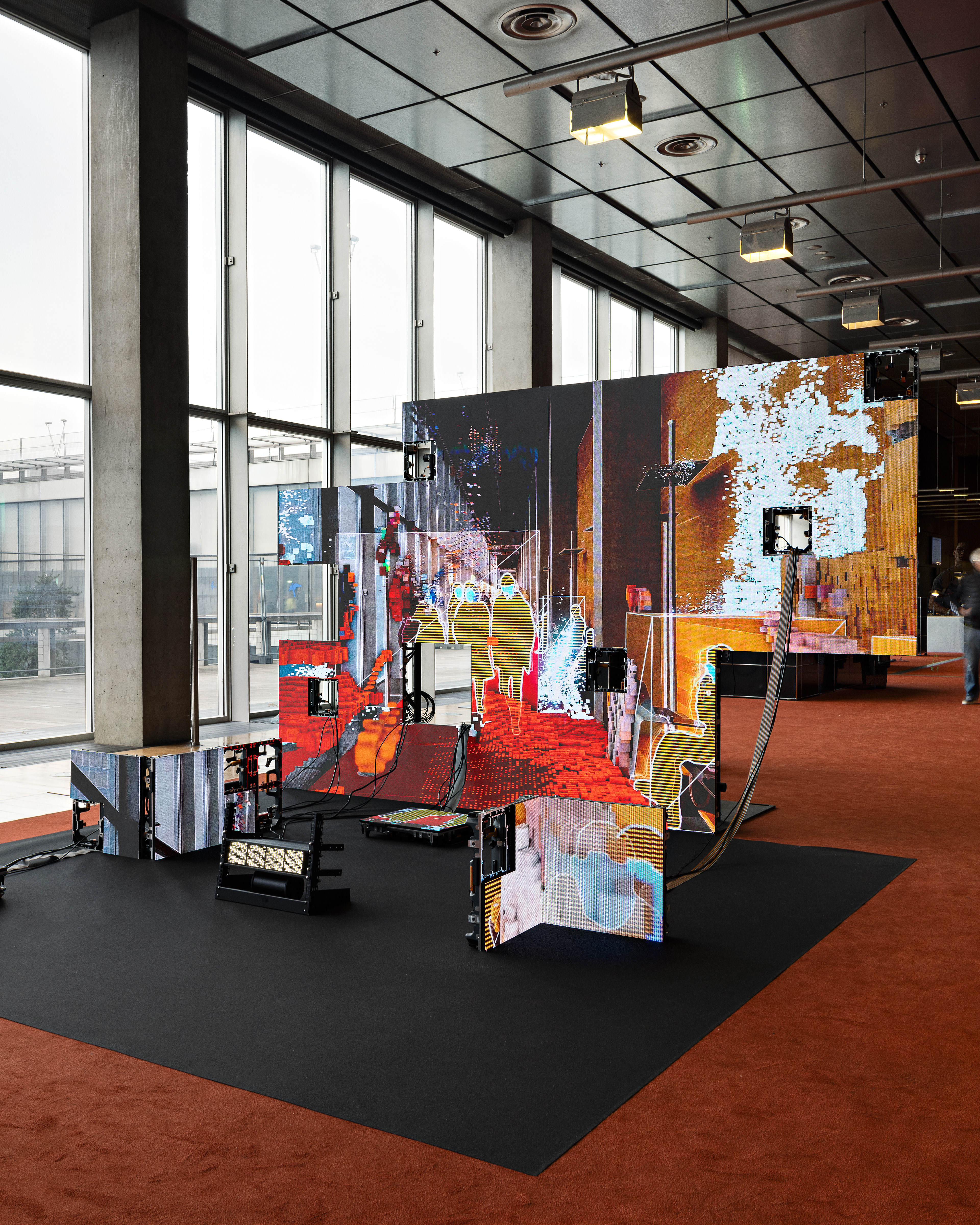

We combined volumetric captures, AI-driven segmentation, and real footage to create a fragmented digital point of view: a sense of how a machine might “see” reality. Using Meta’s Segment Anything 2 (SAM2), we detected and isolated people in video and dynamically integrated them into a shifting volumetric environment. AI-generated masks continuously reshaped the 3D space, while custom shaders simulated the unstable perception of a robotic TAD system, as if the machine were actively trying to understand its relationship to human presence.

Rather than presenting a single static volumetric state, the scene evolves, fluctuates, and recalibrates — mirroring an ongoing process of comprehension.

It was a privilege to introduce the work alongside Joelle Pineau, VP of AI Research at Meta, and to share it with Rachida Dati, French Minister of Culture.

Credits & Support

Created in collaboration with Mehdi Mejri and Meta, with support from the teams at Fisheye Immersive, BnF-Partenariats / Le Lab, and the French Ministry of Culture.

Technologies

Volumetric Capture / AI Segmentation / Real-Time Compositing / Custom Shader Systems / SAM2

Deep Diving at Meta Festival 2025